If you want to know the secret to a great customer-brand relationship, it’s A/B testing. Everything that goes before your customers must be tested: your landing pages, website, emails, website templates, ad concepts, even your color palette. And if you want the best (and most accurate) results, sound A/B testing statistics are the way to get there.

Let’s put it this way: a second opinion is always helpful. Suppose you are planning to buy a house. You will ask several friends who have recently bought one, you will look at several websites to find the best deal, and once you have almost zeroed in on a house, you will obviously ask others whether you should buy it! (Psst… Did you know about real estate marketing automation designed to give you more personalized search results? Just saying!)

Now apply this theory to your business. If you launch your product without finding out whether your target audience will really benefit from it, you are risking your entire product. What if you launch it because you thought it was useful, but your target audience rejects it outright? You wouldn’t want that, right?

Assuming that you know everything about your target market is the first step toward failure. Customer demands change almost daily, and new solutions crop up just as fast. Rather than risk mass rejection, it is better to test your idea with a handful of reliable prospects who represent your ideal customers. And this holds true not just when launching your product, but for every marketing effort that follows.

Data-driven ideas work. But sometimes, while running and analysing A/B tests, you end up either overdoing or underdoing it. At other times, you might miss subtle changes because you are focused on making a big splash. There are many such common A/B testing errors that people make without realizing it.

15 A/B Testing Errors and How to Avoid Them

You want conversions, which is why you are running A/B tests. To get better results, steer clear of the common errors below. Here are 15 of them and what you can do about each.

1. You tested for a long, long time

Yes, that’s right. You can run an A/B test for too long, and that can genuinely hurt your website and waste your time. If you stretch your A/B test for too long, you are killing opportunities for yourself.

Imagine running an A/B split test for more than three months and seeing the numbers you expected only at the end of the fourth month. By that time, you could have run many more tests. In your attempt to reach statistical significance, you wasted too much time.

The Other Alternative:

You must know in advance what it will take to reach statistical significance.


I’ve repeated ‘Statistical Significance’ so many times already. What’s that?

To put it simply, statistical significance tells you how unlikely it is that the difference you observed between your versions arose by chance alone. Most A/B testing tools report it as a percentage: the higher the percentage, the less likely it is that the result is a fluke.
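For the curious, here is a minimal Python sketch of where that percentage can come from. It assumes a pooled two-proportion z-test, one common method; your testing tool may use a different one (some use Bayesian models), and the visitor and conversion counts are made up for illustration. The value it returns is 1 minus the p-value, which is how many tools present statistical significance.

```python
from statistics import NormalDist


def significance(visitors_a: int, conversions_a: int,
                 visitors_b: int, conversions_b: int) -> float:
    """Return 1 - p-value for a pooled two-proportion z-test (two-sided).

    An assumed, common method; testing tools may compute this differently.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate: what we'd expect from both versions if they were identical.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = (pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b)) ** 0.5
    if std_err == 0:
        return 0.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return 1 - p_value


# Hypothetical counts: 5% vs. 6% conversion, first with 5,000 visitors per
# version, then with only 500 visitors per version.
print(f"{significance(5000, 250, 5000, 300):.1%}")
print(f"{significance(500, 25, 500, 30):.1%}")
```

With 5,000 visitors per version, the same one-point difference lands above 95%; with only 500 per version, it falls well short, which is why traffic volume matters so much in the sections that follow.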

So, how can I know what I need to get proper results?

There are two ways to do this:

1. Keep tabs on the statistical significance daily, and keep doing so for a while.

2. Sketch out a proper plan and work in reverse.

Why Reverse?

You see, the first option brings you right back to where you began: you can end up ‘waiting’ forever. Some tools give results after just 100 visits, which is far too few to trust. Others wait for 100,000 visits. None of these thresholds are tied to a timeline, which is why the second method is more appropriate.

As for ‘why reverse’: when you have a goal set, it becomes easier to work toward it. Let’s see how.

Setting the plan:

You can start by noting down a few metrics, such as:

- What is your current conversion rate?

- How much improvement do you expect?

- How many variables will you test?

- How many visitors per day does the page you want to test receive?

- Exactly what portion of your audience will see this test?

Executing:

Now that you have the facts before you, an A/B testing tool can help. You can try Aritic PinPoint’s A/B testing feature too. Just saying! Why hunt for another tool when you can do it all from one?

So, as I was saying: with these metrics, your A/B testing tool can estimate the number of days you need to run the test to reach statistical significance.
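If you’d like to see roughly what such an estimate involves, here is a minimal Python sketch. It maps the planning questions above onto a standard normal-approximation sample-size formula for comparing two conversion rates, assuming 95% confidence and 80% statistical power (common defaults, not requirements). The function names and the example plan are hypothetical, and your tool’s exact method may differ.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate: float, relative_lift: float,
                           confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate visitors each variation needs (two-sided two-proportion test).

    Normal-approximation formula; the defaults are assumed, conventional values.
    """
    p1 = baseline_rate                        # "current conversion rate"
    p2 = baseline_rate * (1 + relative_lift)  # "improvement you expect"
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(baseline_rate: float, relative_lift: float, variations: int,
                daily_visitors: int, traffic_share: float) -> int:
    """Days until every variation (control included) has enough visitors."""
    per_variation = visitors_per_variation(baseline_rate, relative_lift)
    # "What portion of your audience will see this test?"
    visitors_in_test_per_day = daily_visitors * traffic_share
    return math.ceil(per_variation * variations / visitors_in_test_per_day)


# Hypothetical plan: 3% conversion rate, hoping for a 20% relative lift,
# control + 1 variation, 2,000 visitors/day, all of them in the test.
print(days_to_run(0.03, 0.20, 2, 2000, 1.0))
# Same plan, but only half of the page's visitors enter the test.
print(days_to_run(0.03, 0.20, 2, 2000, 0.5))
```

With these hypothetical inputs, the sketch lands at about two weeks; halving the share of traffic in the test roughly doubles that, and hunting for a smaller lift stretches it much further. That end date is the goal you work backward from.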

Now you have set an end-goal for your tests. You know what to do to get to that outcome. What follows next is setting up your tests according to the end result that you want.

2. You called it off pretty early

While stretching your A/B test for too long can hurt your website, the opposite is true as well. You might think I am trying to confuse you: first I tell you not to run tests too long, and now I am asking you not to stop them too early. But seriously, if you pull the plug too early, you miss out on genuine results.

Have you ever run an A/B test that showed great results in two days, and got so happy that you immediately clicked the ‘Stop Test’ button? Yeah! We’ve all been there.

But don’t do that. If you switch to the variant (the change you are testing) too early, an early lead that was just chance can fade, and you might actually lose conversions.

The Other Alternative:

It’s the same fix as for running an A/B test too long: focus on reaching statistical significance and work backward from your plan. Aim to get your test above the 95% mark by its planned end date before you close it down.
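Why not stop the moment a test crosses 95%? Because checking every day gives chance many extra opportunities to look like a winner. The Python simulation below illustrates this under made-up assumptions: 5% conversion, 200 visitors per version per day, a 14-day plan, and a pooled two-proportion z-test (an assumed method; your tool may differ). It runs A/A tests, where both versions are identical, so every ‘winner’ it finds is false.

```python
import random
from statistics import NormalDist


def is_significant(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   confidence: float = 0.95) -> bool:
    """Pooled two-proportion z-test: True if the gap clears `confidence`."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if std_err == 0:
        return False
    z = abs(conv_b / n_b - conv_a / n_a) / std_err
    return z > NormalDist().inv_cdf(1 - (1 - confidence) / 2)


def false_winner_rates(runs=200, days=14, visitors_per_day=200, rate=0.05, seed=1):
    """Simulate A/A tests and return the share of runs wrongly called a winner
    by (a) checking daily and stopping at the first 'significant' reading and
    (b) checking once, at the planned end. All parameters are hypothetical."""
    rng = random.Random(seed)
    daily_peeking = 0
    planned_end = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        would_have_stopped = False
        for _ in range(days):
            n += visitors_per_day
            # Both versions share the same true conversion rate.
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_day))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_day))
            if is_significant(conv_a, n, conv_b, n):
                would_have_stopped = True
        daily_peeking += would_have_stopped
        planned_end += is_significant(conv_a, n, conv_b, n)
    return daily_peeking / runs, planned_end / runs


peeking, planned = false_winner_rates()
print(f"daily peeking: {peeking:.0%} false winners; planned end: {planned:.0%}")
```

Because the daily checks include the final day, the peeking rate can never be lower than the planned-end rate; in practice it tends to come out several times higher, which is exactly how ‘great results in two days’ happen.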

3. You’ve set up your test wrong

I’m sure you’ve run a few A/B tests already before reading this post. Has it ever happened that two variations had almost identical traffic, yet their statistical significance differed hugely, say 0.35% for one and 100% for the other? You might also have noticed that the conversion rates were nearly the same, but the statistical significance was not.

If this has happened to you, you have probably set up your test wrong. Think back to when you were setting up the test. Did you introduce a new variable while the test was running?

If you did, chances are high that the new variable will not reach statistical significance within that test, because it only started collecting data partway through.


But the one without any new variables is performing great. Can’t I rely on that?

Nope. If you rely on only one reading, it is not A/B testing. Even a 100% reading does not mean the change has been properly tested.

The Other Alternative:

As I mentioned at the beginning, statistical significance measures the validity of your A/B test (read: the current test). When you introduce a new variable, it becomes a new test.

If you wish to test several variables, you will need to start a new A/B test from scratch for each variable, or do end-to-end testing to ensure that the application flow is in line with the intended outcome. This way, you will understand more clearly which version gives you your desired results, and you will know exactly ‘which variant’ is producing them.
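Many testing tools handle visitor assignment for you. If you split traffic yourself, one common way to make ‘start from scratch’ concrete is to give each test its own name and assign visitors by hashing that name together with the visitor’s ID. A new test then gets a fresh, independent split instead of inheriting the old one. The Python sketch below uses only the standard library; the function, user IDs, and experiment names are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: tuple = ("control", "variant")) -> str:
    """Deterministically bucket a user into one variant of one experiment.

    Hashing the experiment name together with the user ID means each new
    experiment splits visitors independently of any earlier experiment.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return variants[bucket % len(variants)]


# Hypothetical IDs: the same user always sees the same version within a test...
print(assign_variant("user-42", "headline-test"))
# ...while a new test on a new variable gets its own, independent split.
print(assign_variant("user-42", "cta-test"))
```

Because assignment depends only on the user ID and the test name, a returning visitor never flips between versions mid-test, and adding a variable means creating a new test name rather than changing a running one.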

4. You focussed on testing insignificant things

Testing variants is great, but testing ‘all’ of them doesn’t mean focusing on every single item or design element. Take a look at the search results when I Googled ‘Facebook Image Size’:

There are many resources available on the exact measurements. When I am testing my social media ad specifically for Facebook, I need not focus on changing the size of my image. I already have all the information with me.

If you start spending time on A/B testing every single aspect, then you will miss out on testing things that are more pivotal in increasing your conversions.

The Other Alternative:

There are so many other things that you MUST test. Here are 5 major things to test (always):

#1 Headline: David Ogilvy said that when you have written your headline, you have spent eighty cents out of your dollar. That sums up how much headlines matter for conversions.

#2 Call To Action: While you will find plenty of content on which button colors drive more conversions, you should also test your CTA’s wording and placement.

#3 Pricing: Testing your pricing page is essential (as scary as it is).

#4 Images: Images have the potential to speed up your conversions. They catch attention instantly and convey a non-written message effectively, so testing them can be highly beneficial.

#5 Product Description: This can be any text that describes your product features. It can be long or short. You need to test the length and also choose your words carefully to understand what connects with your target audience.

5. You did not have enough traffic, but you went ahead with A/B Testing

Let’s say you have just launched your product and have just a handful of beta users. You decide to run a test. After running the test for two days, you notice that version B is showing better results than version A.

However, since your traffic is very low, you cannot tell whether the difference is real, let alone why it happened. You would probably need more than five months to reach statistical significance, and as we already discussed, waiting that long wastes time, money, and energy.

The Other Alternative:

Since your traffic is low, it is better to stick with version B. Let’s say you have 2-3 sales per month. At that volume, if one version is showing better results, opt for that version. Rather than waiting months to reach statistical significance, focus first on increasing your traffic to a workable minimum.

6. You’ve generalized your results

This is another common error that even experts make.

Imagine you run an A/B test and your variant shows a 35% increase in conversions against the control.

Voilà! You just found the secret to increased conversions. Now you start repeating this EVERYWHERE. Nice, isn’t it?

Fast-forward a few weeks, and you start seeing a site-wide dip in your conversion rates. You’re bewildered because you used the same formula that gave you success. It turns out that those results were entirely test-specific, and so was the conversion increase.

Your mistake? You generalized your test results and implemented them site-wide.

Although it would have been a lot easier if you could apply the same design, wording, CTA, and all of the other elements everywhere, you cannot.

Actually, you SHOULDN’T.

The Other Alternative:

A site-wide change should not be an instant decision; treat it as a gradual process. Each time you carry the change to a new page, set up a new test there and wait to see whether the big conversion gains actually happen. Then repeat. Period.

If this change is worth applying site-wide, it will show results with time. Don’t jump without testing.

7. You ignored failures

Did I mention that failures in A/B testing are as common as these errors that we keep making? Yes. It can take you more than one test to get the maximum conversions.

The other Alternative:

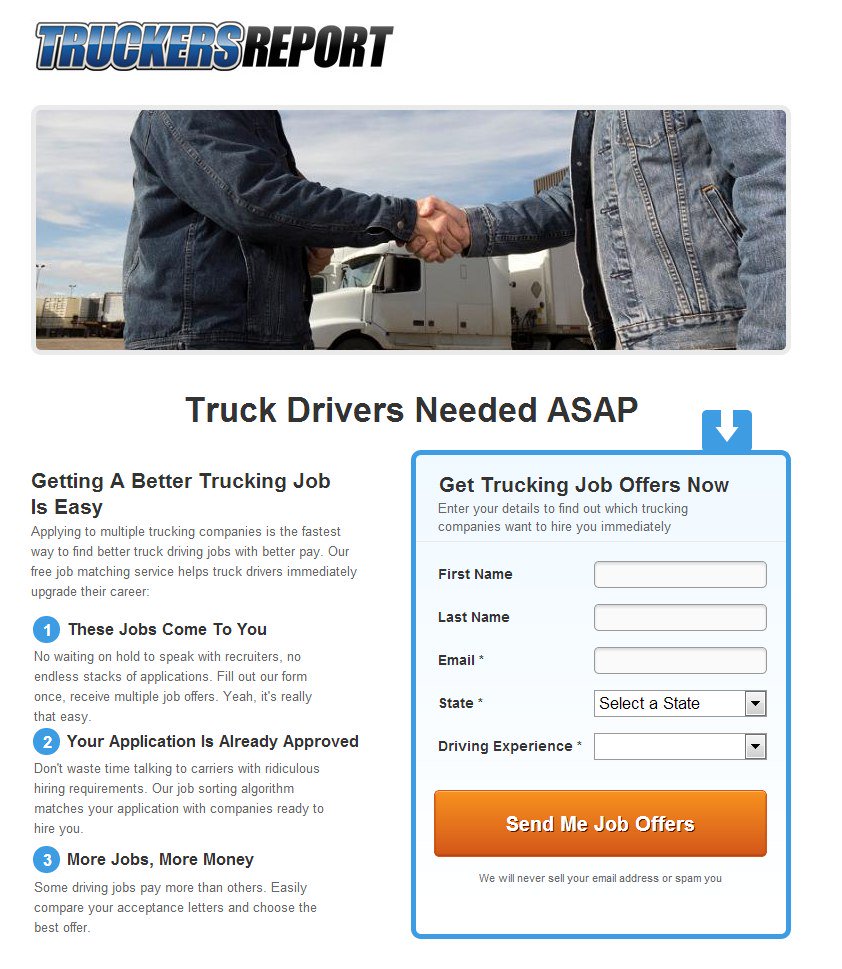

Did you know TruckersReport, a network of professional truck drivers, took 6 rounds of tests of their landing page until they saw a variation performing 79.3% better than the other variation? Below is the landing page they had before they started the test-

This landing page had a 12.1% conversion rate (email opt-ins), followed by a 4-step resume-building process. Next came a detailed analysis. Here are a few of their observations:

- About 50% of their traffic came from smartphones, so they needed a mobile-responsive design.

- The headline neither conveyed a benefit nor addressed the pain point. A better headline was needed.

- The ‘handshake’ image was old-school and overused. A better, more relatable image was needed.

- The page lacked credibility.

- The design was boring, amateurish, and too basic.

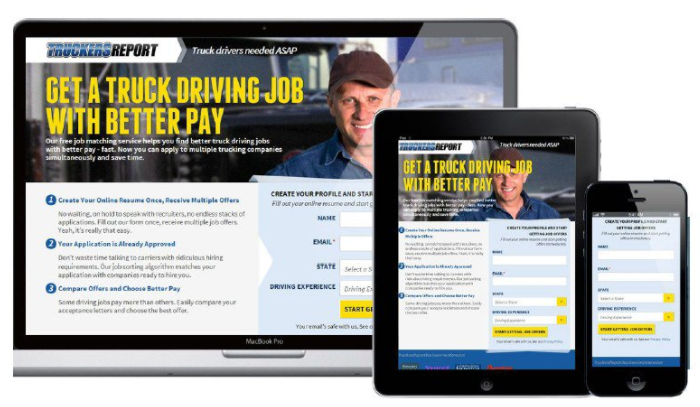

Merging these points with the three basic requirements of the drivers – better pay, benefits, and more personal time off – TruckersReport created a new landing page that looked like this:

And then they ran the tests: six in total, with various hypotheses and controls, before deciding on the winning version. A quick overview of the tests:

Test #1

Hypothesis: Fewer form fields = less friction and more conversions.

Test #2

Hypothesis: Content that addresses truck drivers’ pain points, using the words they commonly use (collected from a survey), resonates better with the target audience.

Test #3

Hypothesis: Original landing page vs new landing page

Test #4

Hypothesis: Testing more headlines = better conversion rates.

Test #5 & #6

Built a ‘simpler’ version of the page. How?

– New design with compact layout

– Keep only the ‘email’ field

– Remove the ‘name’ field and place the ‘email’ field at the end

After all these tests, the final round achieved a 21.7% conversion rate with no overlapping traffic. That was 79.3% better than the version they had started with.
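Note that ‘79.3% better’ is a relative lift, not a jump of 79.3 percentage points: the rate rose by 9.6 points, from 12.1% to 21.7%. A quick Python check using the rates reported above (the helper name is just for illustration):

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """How much better the variant converts, relative to the control."""
    return (variant_rate - control_rate) / control_rate


# TruckersReport's reported rates: 12.1% before testing, 21.7% after.
print(f"{relative_lift(0.121, 0.217):.1%}")  # prints 79.3%
```

Keeping relative and absolute changes apart matters whenever you compare results across tests with very different baseline conversion rates.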

You can read the entire test summary here.

8. You opted for a random hypothesis

Disclaimer: A/B testing should not be based on a ‘hypothesis’ that has no credibility.

I like cakes. But cake testing (throwing one at the wall to see if it sticks) is weird. Similarly, A/B testing with random ideas is weird and a drain on resources: you waste money, energy, and traffic. If you start testing a random hypothesis with no grounding in reality, your traffic will bid adieu in no time. So will your money!

The Other Alternative:

Before running an A/B test, be clear about the hypothesis you are testing. If you are not sure your hypothesis is credible and one version happens to perform well, you are relying on ‘nothing’.

What can you do?

– Conduct proper research of your customers’ pain points

– Analyse the reason for this pain point

– Know what others are offering and why that isn’t sufficient

– Find out exactly what your target audience is looking for

– Identify the point on your website where visitors are quitting, and why

Based on this information, create your hypothesis and then start off with A/B testing.

9. One page; Too many tests

A/B testing is a lot like gambling. You might lose a few tests, but then you finally find a winner. You’re so elated that you start launching more tests, one after another. Soon you have run 20 tests and are seeing steady conversion gains of just 0.3%.

Can you relate to this scenario? Then you have already fallen victim to ‘opportunity cost’ and ‘local maximum’.

What are these?

- Opportunity cost is the value of the alternative you give up in order to pursue another action. When you spend that much time on one page, you eat into the time reserved for optimising other pages.

- Local maximum is a commonly used design term. It refers to the peak of your current design, a point beyond which there are no further meaningful returns. Whatever you do from this point will give you only minute gains, because you have already achieved as much as possible with the current design.

Watch out for the local maximum. As soon as your page starts showing only small returns, assume that you have hit it.

The Other Alternative:

Be cautious. Know when your page has already hit the local maximum. Once it has, it’s time to move on.

Remember how we talked about setting a goal for your A/B testing to know how long you should run your tests? Focus on that goal. The moment your gains dip below your trade-off value, you must move on.
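If you want to turn ‘trade-off value’ into a rule you can actually check, here is a minimal Python sketch. The threshold (a 1% relative lift) and the three-test streak are hypothetical choices you would set in your own plan, not standard values.

```python
def hit_local_maximum(lifts: list, min_worthwhile_lift: float = 0.01,
                      streak: int = 3) -> bool:
    """True once the last `streak` tests on a page all fell short of the
    smallest lift worth the effort (the hypothetical trade-off value)."""
    recent = lifts[-streak:]
    return len(recent) == streak and all(lift < min_worthwhile_lift for lift in recent)


# Hypothetical relative lifts from successive tests on one landing page.
history = [0.12, 0.06, 0.02, 0.004, 0.003, 0.003]
print(hit_local_maximum(history))  # True: the last three tests each gained under 1%
```

Requiring a streak rather than a single weak result keeps one unlucky test from pushing you off a page that still has room to improve.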

10. One funnel; Too many tests

You have your sales path ready. You have set up the funnel flow and, accordingly, set up A/B tests for each page. Let’s say your sales funnel path looks like this:

Landing Page -> Product Page -> Pricing Page.

Perfect.

Now you set up two variants against the control for each page. So far so good.

Result: Your test results are all messed up. You don’t know what led to the increase or dip in your conversions, and why.

Why did this happen?

Okay, go back to the starting point. You have three pages: a landing page that leads to the product page, which in turn leads to the pricing page. Each page has three versions (the control plus two variants), which totals nine page versions.

Now, all these pages are part of your sales funnel, the SAME funnel. Hence, you have three versions of your landing page leading to three versions of the product page and then to the pricing page (again three versions). Imagine the mess now. Technically, you have 27 (3 × 3 × 3) different sales paths. Phew!
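The arithmetic behind the mess: nine page versions, but every landing version can lead to every product version, which can lead to every pricing version. A quick Python check that enumerates the paths, using the page names from the example and hypothetical version labels:

```python
from itertools import product

pages = ["Landing", "Product", "Pricing"]
versions = ["control", "variant 1", "variant 2"]  # hypothetical labels

page_versions = [(page, version) for page in pages for version in versions]
# Every combination of versions a visitor could pass through, one per page.
paths = list(product(*[[f"{page} ({v})" for v in versions] for page in pages]))

print(len(page_versions), "page versions")  # 9 page versions
print(len(paths), "sales paths")            # 27 sales paths
print(paths[0])
```

Each extra variant multiplies the number of paths rather than adding to it, which is why the traffic behind any single path thins out so quickly.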

The Other Alternative:

Instead of juggling between 27 different sales paths, here are two things you can do:

1. Know which page falls under which funnel. Map out your sales flow accurately and record it for further reference.

2. Try the ‘reverse’ method: start testing from the back of the funnel. A change to the last page doesn’t alter the traffic flowing into the earlier pages, so it won’t skew their results. If you start from the front of the funnel, every change alters the traffic that reaches the later pages, and the end results get messed up. Testing in reverse leaves you fewer variables to worry about and keeps your testing environment a lot more controlled.

11. You did not test your copy

I am sure you have heard this almost a zillion times – Content is KING.

True. Even when you are A/B testing, if you are testing with poor copy, you will not get more conversions.

Let’s say you have picked the perfect words to convince your target users. Now only your Call-to-Action button text is left. You decide to test the CTA content and pick two alternatives: Enter vs Submit.

And you sit back to see which one moves the needle more. But there’s a problem.

Both words are interchangeable. Even if ‘Enter’ gave you slightly better results than ‘Submit’, what would that eventually prove?

Result: Your changes are not noteworthy and you won’t really understand what kind of content moved your target audience.

The Other Alternative:

Instead, try testing two different kinds of language. Not French versus Spanish (unless you have a global audience and are targeting country-specific users), but two distinct approaches to your message, like below:

The Control

Here we are testing two different kinds of language. The variation is outcome-based: users are told they are not just signing up, but signing up for more traffic. The control takes a more straightforward approach. In this test, even if the variation does not beat the control, you at least learn that your users prefer a straightforward approach in your CTAs. This information will help you design your future A/B tests.

12. You focussed only on getting conversions

You might be thinking: ‘Oh, come on! We are talking about A/B testing errors. Isn’t it obvious that the focus will be on getting conversions? That was the whole point of doing A/B testing.’

I agree: we started A/B testing because we wanted to increase conversions, and for the same reason we set out to identify and resolve these common errors. But too much focus on conversions can mess up your A/B testing. Here’s why:

Let’s assume you have a pricing model something like this:

- Basic plan: Free

- Premium: $49.99/month

You decrease the Premium price to $20 and see a 4% spike in your conversion rate.

Great, right? Your plan worked.

And then you take a closer look at the revenue generated from that page.

Yes. You guessed it right. Your revenue has seen a major dip and that’s bad!

A/B testing Lessons Learnt:

1. More conversions ≠ more revenue.

2. Focusing only on conversions is not the right approach to A/B testing.

The Other Alternative:

First check whether your test is affecting your total revenue. If it is, changing your pricing plan may not be a great idea: while conversions may increase, you might end up losing revenue every single day!
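To see why, compare revenue per visitor rather than conversion rate. The Python sketch below assumes, purely for illustration, that 5% of pricing-page visitors bought Premium at $49.99 and that the ‘4% spike’ means a 4% relative rise, to 5.2%. All numbers and names are hypothetical.

```python
def revenue_per_visitor(conversion_rate: float, price: float) -> float:
    """Average revenue each pricing-page visitor brings in."""
    return conversion_rate * price


# Hypothetical: 5% of visitors bought Premium at $49.99.
before = revenue_per_visitor(0.05, 49.99)
# Hypothetical: after the cut to $20, conversions rose 4% (relative) to 5.2%.
after = revenue_per_visitor(0.052, 20.00)
print(f"before: ${before:.2f} per visitor, after: ${after:.2f} per visitor")

# Conversion rate the $20 plan would need just to match the old revenue.
break_even_rate = before / 20.00
print(f"break-even conversion rate at $20: {break_even_rate:.1%}")
```

Under these assumptions, the $20 plan would need roughly two and a half times the original conversion rate just to break even, so a 4% lift is nowhere near enough. Tracking revenue per visitor as a second metric catches this before you roll the change out.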

13. You’ve picked the wrong time to test

Now that we have come a long way discussing many common A/B testing errors, you might feel well-versed and be itching to start setting up a test NOW.

Awesome. But let’s take it a little slow.

Conducting an A/B test under unfavourable conditions can produce misleading results and ruin all your excitement and enthusiasm.

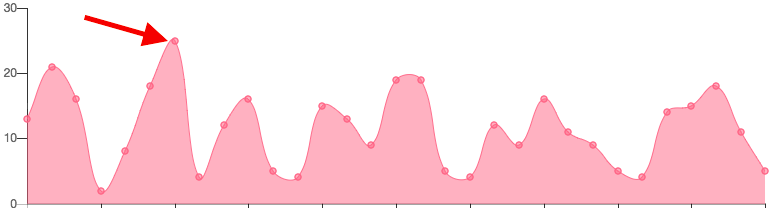

Assuming that your website has a good in-flow of traffic, you decide to test right here where your traffic has spiked up the most-

You decide to test here because your traffic is maximum at this point. Right?

WRONG.

A/B testing during spikes is not a great idea. Spikes signal inconsistent user behaviour: they indicate that users are reacting to something that is not a regular part of your website.

The Other Alternative:

When you run a test, first find out where your spikes are and why. Look through your traffic history for patterns, such as spikes that recur on certain days of the year, and avoid testing during those periods. You need your A/B testing environment to be as controlled as possible.
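A simple way to start that check, sketched in Python under stated assumptions: flag any day whose visits exceed some multiple of the median day. Both the 1.5× factor and the two weeks of traffic below are hypothetical, and spotting yearly patterns would need a much longer history.

```python
from statistics import median


def find_spikes(daily_visits: list, factor: float = 1.5) -> list:
    """Return indexes of days whose visits exceed `factor` x the median day.

    The median is used as the 'typical' day because, unlike the mean,
    a few big spikes barely move it.
    """
    typical = median(daily_visits)
    return [day for day, visits in enumerate(daily_visits) if visits > factor * typical]


# Hypothetical two weeks of daily visits to the page you want to test.
visits = [980, 1010, 995, 1020, 2600, 2450, 1005, 990, 1015, 1000, 985, 1030, 1010, 995]
print(find_spikes(visits))  # [4, 5]
```

Once the spike days are flagged, you can schedule the test around them, or at least check whether they coincide with a campaign, holiday, or press mention before trusting any results from that window.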

14. You did not test everything

Did you think that swapping out an image from your current landing page will not make a difference?

You thought wrong.

Another common A/B testing error is not testing everything. Even rearranging your homepage can change your conversion rates. While making insignificant changes is a waste of time, ignoring element placement, copy quality, and templates can ruin your chances of increasing conversions.

I know you must be thinking I am contradicting myself. Earlier I said that wasting time on insignificant changes can ruin your chances of getting more conversions. Now I am saying test everything.

What do I mean?

Let’s say you are the CEO of your company. You feel that adding an image to your homepage can boost your conversion rates, so you add the new image. Your other teams are neither involved in nor aware of this decision, you didn’t check how it fits the current sales funnel flow, and you don’t even know what other tests are running.

In another scenario, the entire team comes together to understand whether adding that image can really boost your conversion rates. Everyone is aware of how a slight change can affect conversions.

At this point, your entire organization is committed to testing, and you are well aware that without running an A/B test you cannot know how it will impact the sales.

So, how is this related to A/B Testing Everything?

Since everyone in your organization is aware of the impact small changes can have on conversions, no one will implement a change without testing it first. This way, everything goes through A/B testing rather than relying on the founder’s orders!

15. You’re never satisfied with your results

Okay. We are done discussing the factual errors we make in A/B testing. Now for a psychological one.

I have a friend who is never happy with any of her A/B testing results. Whether the win is of 0.5% or 50%, she is always like ‘Yeah I expected this’. But if the result is not as per her expectations, she starts whining about it and almost goes into depression!

That’s weird. Don’t do that.

Every win, big or small, is awesome. Celebrate it with your team. That motivates everyone to do more and better. Otherwise, one fine day you will run out of A/B tests to run, and then you will feel even worse.

These are a few errors that are very common in A/B testing. I’m sure you related to some of them from your past results (or maybe not, which is great).

Your views, please 🙂

If you have seen people make other errors, or you have spotted common flaws in your own A/B testing, share them with us. We’d also love to hear how you achieved great results with A/B testing.

12 Comments

A/B testing is one of the easiest and most popular forms of conversion rate optimization (CRO) testing known to marketers as it improves their decision making capacity. I am, without doubt, going to keep in mind all the common mistakes that you have pointed out in this post before I start my test! Thanks for sharing.

This is a comprehensive and a very helpful blog, Madhu! A/B testing is actually fun, we can learn so much from every test and improve our email campaigns.

Hey, madhu! This is probably the best and most precise blog about A/B testing that I have come across! Thanks for sharing.

A very well written article, Madhu. Love the way how your tone experimental and guiding. Looking forward for more such posts from your side.

Hey Madhu, I have been following your work for a while now. Digital Marketing is not my strong suit but learning slowly and steadily with all the information you guys are providing.

Thanks a lot, Pritha to give the proper guidance in A/B testing as it helps us to target resources for maximum efficiency indirectly contributes to increase ROI.

It’s great blog! Pritha. You can learn a lot about A/B testing and improve our email campaigns.

A/B testing is one of the most accessible forms of conversion rate optimization (CRO) testing. Thanks for sharing.

A/B testing can help you to improve your email campaigns. Thanks a lot for updating this full blog.

Well -written Pritha, the guidance about A/B testing is essential because it will help improve your email campaigns.

Thanks for this thorough guide on A/B testing, since it is one of the most important factors for any business success.
